Generative design, manufacturing, and molecular modeling of 3D architected materials based on natural language input

Abstract

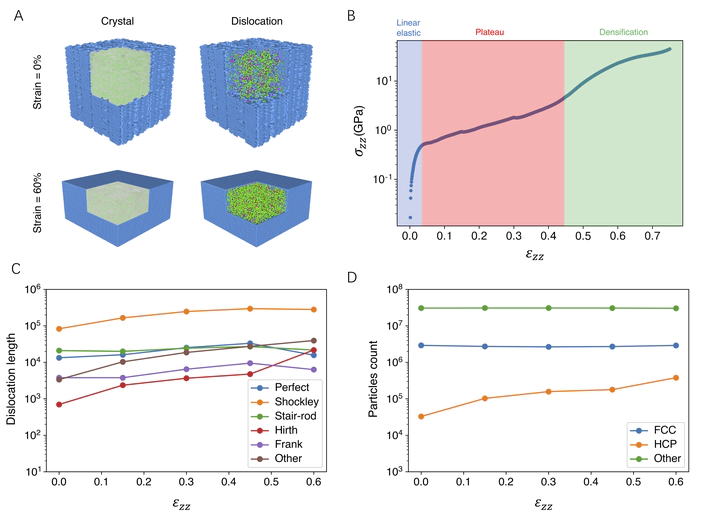

We describe a method to generate 3D architected materials from mathematically parameterized, human-readable word input, offering a direct materialization of language. Our method combines a vector quantized generative adversarial network (VQGAN) with contrastive language-image pre-training (CLIP) neural networks to generate images, which are translated into 3D architectures and then 3D printed using fused deposition modeling into materials with varying rigidity. The resulting materials are further analyzed in a metallic realization as an aluminum-based nano-architecture using molecular dynamics modeling, which provides mechanistic insights into the physical behavior of the material under extreme compressive loading. This work offers a novel way to design, understand, and manufacture 3D architected materials from mathematically parameterized language input. At its core is a generally applicable algorithm that transforms any 2D image data into hierarchical, fully tileable, periodic architected materials. This method has potential applications beyond language-based materials design and can open other avenues for the analysis and manufacturing of architected materials, including microstructure gradients through parametric modeling. As an emerging field, language-based design approaches can have a profound impact on end-to-end design environments and drive a new understanding of physical phenomena that intersect directly with human language and creativity. They may also be used to exploit information mined from diverse and complex databases and data sources.
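
To make the image-to-architecture idea concrete, the following is a minimal sketch of one way a 2D image could be turned into a tileable 3D unit cell. It is not the algorithm used in this work: mirroring to obtain matching edges and a simple height-map extrusion are assumptions chosen for illustration, and the function names `make_tileable` and `heightmap_to_voxels` are hypothetical.

```python
import numpy as np

def make_tileable(image):
    """Mirror a 2D array into a 2x2 block whose opposite edges match."""
    top = np.hstack([image, np.fliplr(image)])
    return np.vstack([top, np.flipud(top)])

def heightmap_to_voxels(cell, n_layers):
    """Turn a 2D array of gray values into a boolean voxel grid (x, y, z)."""
    cell = cell.astype(float)
    span = cell.max() - cell.min()
    # Normalize to [0, 1]; a constant image maps to all zeros.
    norm = (cell - cell.min()) / span if span > 0 else np.zeros_like(cell)
    # Each pixel becomes a column of 1..n_layers filled voxels (assumed mapping).
    heights = 1 + np.rint(norm * (n_layers - 1)).astype(int)
    z = np.arange(n_layers)
    return z[None, None, :] < heights[:, :, None]

# Stand-in for a generated image: random gray values (illustrative only).
rng = np.random.default_rng(0)
image = rng.random((4, 6))

cell = make_tileable(image)                     # periodic 2D unit cell
voxels = heightmap_to_voxels(cell, n_layers=8)  # 3D unit cell as voxels
tiled = np.tile(cell, (2, 3))                   # repeat the cell in-plane
```

Because the first and last rows and columns of the mirrored cell are identical, repeating it with `np.tile` introduces no jump in gray value at the seams. A voxel grid of this kind would still need to be converted to a surface mesh before 3D printing.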